I collaborated with the Operations team as an independent contributor on an internal data transformation platform to deliver complete, accurate, and timely data across the Bank's CIB Division, covering front-to-back office data architecture and structure management.

The platform's aim was to provide a structured, clean source for all data: offering self-service as part of one-stop-shop risk data access, staying ahead of regulatory pressure, and eliminating manual workflows, all while handling trillions of calculations computed daily.

— Fragmentation, Ambiguity, and Pressure to Deliver Trust

By 2020, Deutsche Bank CIB was under pressure to meet BCBS 239 principles, with regulators highlighting gaps in risk data aggregation. Over €13 billion in compliance-related costs were reported between 2016 and 2020, with data governance a recurring challenge across audits.

The bank managed over 7,000 applications globally, many legacy or siloed, complicating data quality and traceability. In response, it launched the Enterprise Risk Data Architecture (ERDA) program and consolidated 45% of critical data flows by mid-2020.

— The Challenge

When Finding Data Means Finding the Right Person

Deutsche Bank's Capital Control Operations team faced a fundamental challenge: navigating an enterprise data landscape of over 7,000 disparate applications, many siloed, duplicated, or undocumented.

Data producers and consumers across the Corporate & Investment Bank (CIB), from risk managers and regulatory reporting teams to business analysts and operations, had no consistent way to discover, trust, or validate the data underpinning decisions and compliance. Instead, they relied on:

- Tribal knowledge
- Ad hoc spreadsheets and file shares
- Manual data onboarding across email chains
- Local logic undocumented beyond individual desks

“On a daily basis I have to use and source data from many systems. Too many things have to be done manually.” — Risk Managers, Risk Wide

This wasn't just inefficient—it was risky. Regulatory programs like BCBS 239, CCAR, and FRTB demanded auditable, reliable, and transparent data pipelines.

As Deutsche Bank adopted Cloud infrastructure, the need for federated governance, consistent onboarding, and lineage visibility became a critical strategic enabler—not a side task.

— Our Approach

Co-Designing a Data Service for the Real World

The team took a dual-track agile approach, building and testing the service platform iteratively while uncovering gaps in process and ownership across the data lifecycle.

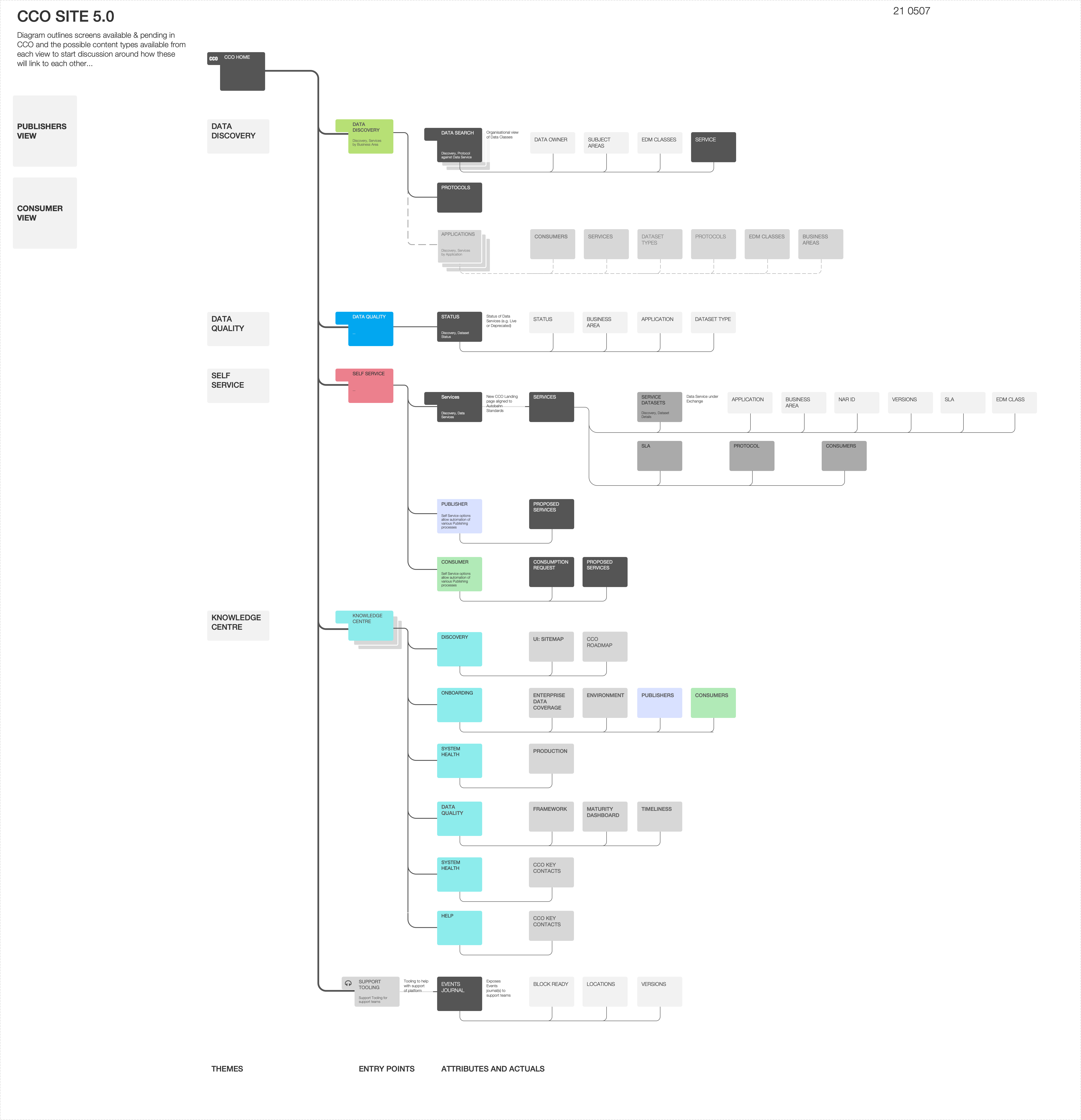

Initial sketch attempting to rationalise data holistically and start mapping the platform structure

Key methods included

- Mapping workshops with publisher and consumer teams to identify friction points
- Journey walkthroughs of onboarding, governance, and issue management
- Collaborative prototyping within 2-week design and delivery sprints
- Story development in JIRA with integrated wireframes and usage annotations

Workshop with Onboarding Leadership, Business Analyst & Product Owner sketching the existing publisher workflow, detailing all upstream and downstream touchpoints and dependencies.

Each sprint followed a structured arc

- User stories co-written by service owners and designers
- Testable wireframes reviewed and iterated before backlog commitment
- UI demos delivered biweekly to stakeholders
- Portfolio-level progress shared in cadenced steering sessions

— Solution Overview

Five Epics. One Platform. Zero Guesswork

The final platform was structured to deliver value through five defined epics, each tied to concrete functionality and measurable outcomes:

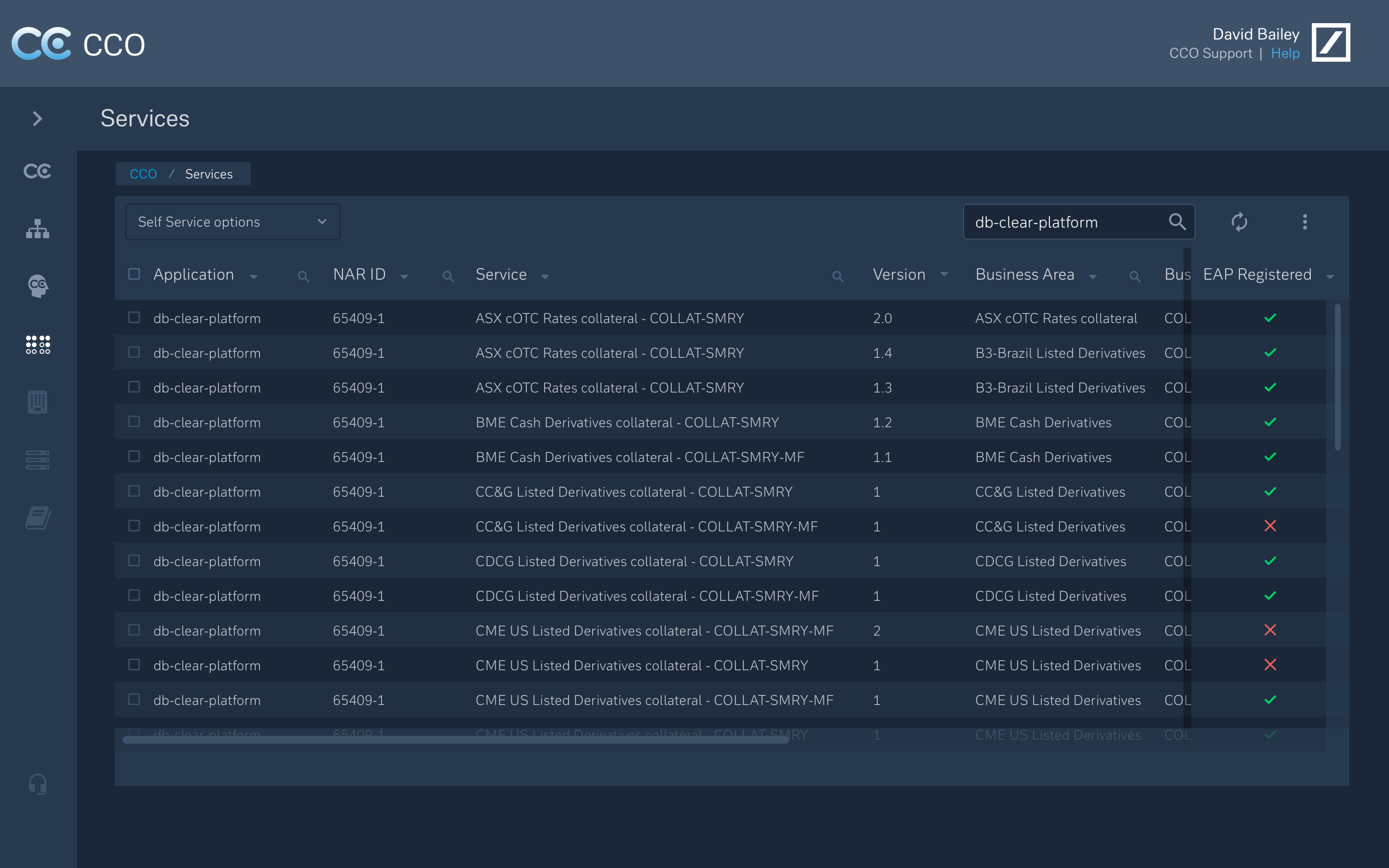

CCO as a Data Service

A modular platform enabling publishers and consumers to interact via defined SLAs, service quality indicators, and lineage tracking.

Self-Service Onboarding

Step-by-step guided flows for new data sources and consumers, eliminating manual Excel-based coordination.

SLA Transparency and Governance

Real-time views of data service maturity, service ratings, exception volumes, and lineage exposure — visualised via interactive dashboards.

Search & Discovery

Context-rich exploration of the enterprise data model, enabling navigation by business unit, product, or data attribute.

Data Model & Lineage Visualisation

“A significant focus of the design work was on how to visualise complex ownership structures and the relationships between data services, controls, and business entities.” — Senior UX Architect, CCO Delivery

Concepts created to visualise the Enterprise Data Model as an interactive structured search.

- Explored multiple layout models (grid, mosaic, tree) to represent complex data ownership
- Prototypes tested how users navigate from product to service to data field
- Supported traceability and transparency, helping users evaluate completeness and lineage

Together, these components formed a service experience that was auditable, accessible, and usable — whether the user was a cloud engineer onboarding APIs or a risk analyst tracing market risk submissions.

— Design Decisions

Navigating Density, Scale, and Trust

The design challenge was not just interface usability; it was information trustability at scale.

Visual Model Selection

Initial concepts used tree-style diagrams, which provided clear hierarchy but became unreadable at scale.

A mosaic model with visual heat-maps and layered abstraction proved more performant and cognitively digestible.

Governance at a Glance

The UI emphasised

- Service Maturity Scores
- SLA Ratings
- Issue Volume Visualisations

These helped users assess “Can I trust this data?” without deep technical interrogation.

Search-First Architecture


Search behaviour varied by user: some queried by domain, others by protocol or product.

We designed for entry-agnostic exploration, surfacing adjacent results and lineage by default to enable contextual understanding.
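As a rough illustration of entry-agnostic exploration, the sketch below matches a search term against any facet of a catalog entry and attaches upstream lineage and adjacent services to every hit by default. It is a minimal sketch, not the platform's implementation: the `DataService` type, its fields, the `search` function, and the sample catalog entries are all hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataService:
    """A hypothetical catalog entry; all field names are illustrative."""
    name: str
    domain: str
    product: str
    protocol: str
    upstream: tuple[str, ...] = ()  # names of services that feed this one


def search(catalog: list[DataService], term: str) -> list[dict]:
    """Match a term against any facet, then attach lineage and neighbours."""
    by_name = {s.name: s for s in catalog}
    term = term.lower()
    results = []
    for svc in catalog:
        facets = (svc.name, svc.domain, svc.product, svc.protocol)
        if not any(term in f.lower() for f in facets):
            continue
        # Lineage: walk upstream links transitively, guarding against cycles.
        lineage, stack, seen = [], list(svc.upstream), set()
        while stack:
            name = stack.pop()
            if name in seen or name not in by_name:
                continue
            seen.add(name)
            lineage.append(name)
            stack.extend(by_name[name].upstream)
        # Adjacent results: other services sharing the same domain or product.
        adjacent = [
            other.name
            for other in catalog
            if other.name != svc.name
            and (other.domain == svc.domain or other.product == svc.product)
        ]
        results.append(
            {"service": svc.name, "lineage": lineage, "adjacent": adjacent}
        )
    return results


# Made-up sample data, purely for illustration.
catalog = [
    DataService("trade-capture", "front-office", "rates", "rest"),
    DataService("risk-sensitivities", "market-risk", "rates", "kafka",
                ("trade-capture",)),
    DataService("var-submission", "market-risk", "fx", "sftp",
                ("risk-sensitivities",)),
]

for hit in search(catalog, "sftp"):
    print(hit)
```

Whether the user enters by domain, product, or protocol, the result has the same shape, so the interface can always show where the data came from and what sits next to it.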

Fidelity Planning & Testing

Designs were tested at low, medium, and high fidelity throughout sprints. Each flow — e.g., onboarding a new consumer or viewing service impact — was validated for:

- Clarity
- Accessibility
- Error tolerance

Where possible, prototypes were shared in local environments for hands-on validation before code.

— Metrics & Outcomes

Delivery Over 19 Months

While this program was not intended to directly reduce time-to-task metrics (like hours saved), it transformed key capabilities across onboarding, governance, and discovery.

Summary Statistics

- 19 months continuous delivery
- 1 initiative under CCO umbrella
- 8 epics across design and engineering
- 70+ stories implemented
- Biweekly demos across squads and leadership

| Area | Before | After |
|---|---|---|
| Data Findability | Relied on internal networks and shared drives | Searchable, federated catalog with lineage |
| Governance Visibility | Quarterly audits | Live dashboards with maturity/SLA visibility |
| Exception Management | Ad hoc email chains | Structured escalation and resolution flows |
| Service Communication | Tribal knowledge | Defined roles and service communication paths |

— Strategic Impact

Embedding Trust, Not Just Tools

The CCO platform now functions as a scaffold for strategic governance across the CIB division, embedded into the broader Deutsche Bank cloud and data infrastructure roadmap.

It creates a baseline for

- Future regulatory alignment (BCBS 239, FRTB)
- Federated domain ownership models
- Auditable workflows across internal and third-party data

Most importantly, the program didn't just change systems — it changed how people interact with data, communicate across teams, and participate in ownership.

“CCO is now something that can be used, governed, and built on.” — Senior UX Architect, CCO Delivery
